Your Own AI Assistant: Personalized, Private, and Built for Clinical Thinking

Cite as: Chuan A. Your own AI assistant: personalized, private, and built for clinical thinking. ASRA Pain Medicine News 2025;50. https://doi.org/10.52211/asra110125.012.

Private Generative AI for Anesthesiologists: Part 1

Generative artificial intelligence (AI) is a type of AI focused on content creation: it is trained on enormous datasets from which it can extrapolate novel content. The most widely used and best-known generative AI is ChatGPT, whose name combines Chat (conversational) with GPT: Generative (produces new content), Pre-trained (trained in advance on a large body of existing data), and Transformer (the neural network architecture that powers content generation). OpenAI (San Francisco, CA) has not disclosed the size of the pretraining dataset or the computational power of GPT-4o, the ChatGPT model that has since been superseded twice. Estimates include 24,200 gigabytes of training data, 1 trillion parameters (the learned numerical weights of the neural network; more parameters generally mean greater capability but higher computing and memory demands), and 25,000 supercomputer-grade graphics processors in a cloud server to train the model.1,2 It is this massive scale that earns ChatGPT and other cloud AI products the name large language models (LLMs).

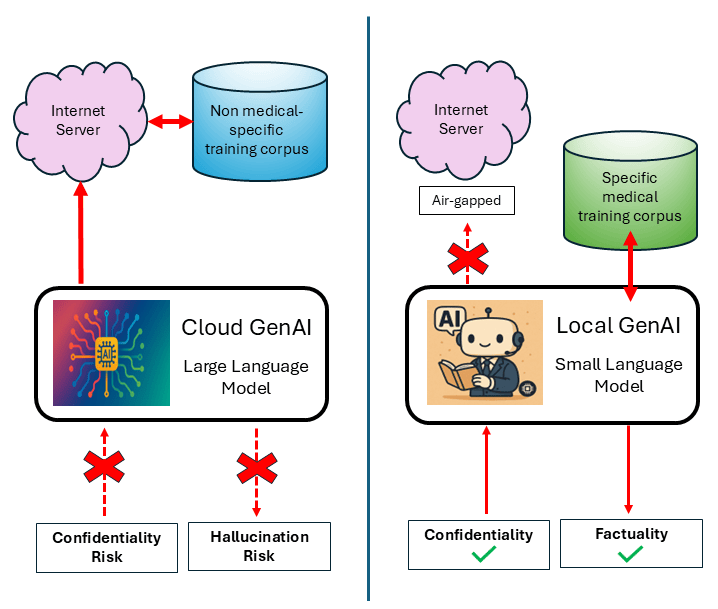

There is growing interest in the use of LLMs in anesthesia and regional anesthesia, with applications ranging from creating anesthesia plans3,4 and managing labor analgesia5 to summarizing transesophageal echocardiography reports.6 However, three major disadvantages recur: confidentiality of personal medical records, accuracy of responses, and hallucinations. The first arises directly from the usage licenses of commercial LLM services, which grant these companies unrestricted access to all data uploaded to their servers, so that the data can leave national medico-legal jurisdictions.7 As a result, all published studies have used either de-identified case notes or non-clinical scenarios, which limits their clinical utility. The remaining two concerns stem from the scarcity of subspecialty medical information in the data used to pretrain LLMs. This results in answers that are inadequate in quality or even outright fabrications, such as those revealed by Wu et al. in a ChatGPT-generated narrative review on dexamethasone as an additive to nerve blocks.8,9

Private Generative AI

The obvious solution to the confidentiality risk is to keep AI disconnected from the internet, where local hospital security systems can protect personal medical data. Because LLMs need enormous computational resources, small language models (SLMs) have been developed that can run on modest consumer-level computers. SLMs are distillations (smaller models trained to reproduce a larger model's outputs) and quantizations (models whose parameters are stored at lower numerical precision) of their larger brethren. While LLMs may have hundreds of billions to trillions of parameters, SLMs range from 1 to 70 billion, though these thresholds are not uniformly defined. In practice, SLMs of 7-14 billion parameters (7b-14b) occupy a sweet spot that reasonably priced hardware can host, while those exceeding 20b require increasingly expensive hardware. Table 1 presents examples of current software and hardware combinations, along with their corresponding trade-offs.

The critical factors that determine how large an SLM a computer can run are whether it has a dedicated graphics processor and how much graphics memory that processor has.

| Hardware set-up | SLM parameter size | Examples of suitable SLMs |
|---|---|---|
| No dedicated graphics processor | SLM processing must be offloaded to the slower central processing unit and use the slower system memory. | |
| Low-tier dedicated graphics processor; small 2-4 GB dedicated graphics memory | Can fit a 1-2b parameter SLM into graphics memory to allow parallel computation. Some prompt processing is offloaded to the central processor. | |
| Mid-tier dedicated graphics processor; 6-10 GB graphics memory | Can fit a 7b parameter SLM, but optimize by: | |
| High-tier dedicated graphics processor; 16-24 GB graphics memory | | |
| Dedicated AI machine for large SLMs | Able to run high-fidelity >70b parameter models. Large context size to maximize chat memory. | |
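The link between parameter count and graphics memory in Table 1 can be approximated with simple arithmetic: the weights alone occupy roughly (parameters × bits per weight ÷ 8) bytes, so a 7b model quantized to 4 bits needs about 3.5 GB before any working memory is counted. The Python sketch below applies that rule. The 4-bit default and the 20% allowance for the context cache and runtime are assumptions chosen for illustration; real requirements vary with quantization format, context size, and software.

```python
# Rough sizing rule: weights need (parameters x bits per weight / 8) bytes.
# ASSUMPTION: a flat 20% overhead for the context cache and runtime; real usage
# depends on context size, quantization format and the software used.


def estimate_vram_gb(params_billion: float, bits_per_weight: float = 4.0,
                     overhead_fraction: float = 0.2) -> float:
    """Approximate graphics memory (GB) needed to load a model fully on the GPU."""
    weights_gb = params_billion * bits_per_weight / 8  # 1e9 params x bytes each = GB
    return weights_gb * (1 + overhead_fraction)


def fits_in_gpu(params_billion: float, gpu_memory_gb: float,
                bits_per_weight: float = 4.0, overhead_fraction: float = 0.2) -> bool:
    """True if the estimate fits in graphics memory; otherwise layers spill to the CPU."""
    need = estimate_vram_gb(params_billion, bits_per_weight, overhead_fraction)
    return need <= gpu_memory_gb


if __name__ == "__main__":
    # Upper ends of the graphics-memory ranges given in Table 1.
    tiers = {"low-tier (4 GB)": 4, "mid-tier (10 GB)": 10, "high-tier (24 GB)": 24}
    for size in (2, 7, 14, 30, 70):
        need = estimate_vram_gb(size)
        fitting = [name for name, gb in tiers.items() if fits_in_gpu(size, gb)]
        target = ", ".join(fitting) or "dedicated AI machine or CPU offload"
        print(f"{size}b at 4-bit: ~{need:.1f} GB -> {target}")
```

Under these assumptions the sketch places 7b and 14b models on a 10 GB mid-tier card, a 30b model on a high-tier card, and a 70b model beyond all three tiers, broadly in line with Table 1. The same rule shows why LLMs need data centers: 1 trillion parameters stored at 16 bits is about 2,000 GB of weights alone (`estimate_vram_gb(1000, 16, 0)`).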

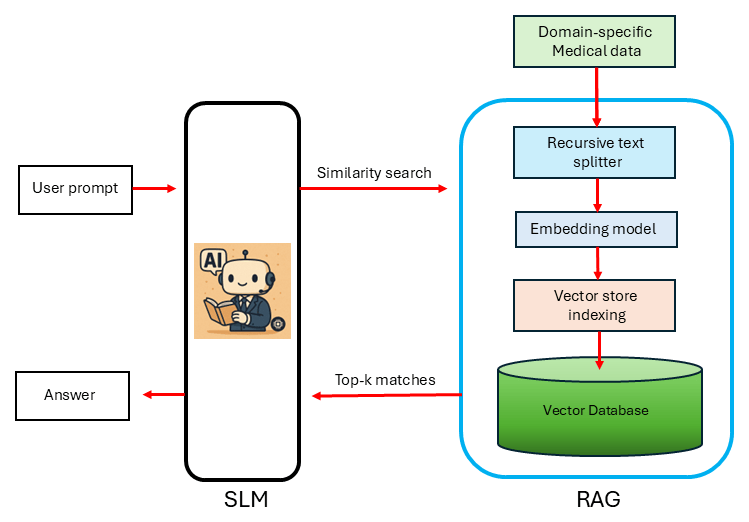

To correct the absence of relevant medical data, SLMs can be connected to a local database through a process called retrieval augmented generation (RAG). When a question is asked, the most relevant passages are retrieved from the database and passed to the SLM, which is instructed to base its answer only on them. This injects contemporaneous and appropriate medical information while retaining the model's conversational ability. Clinicians prefill the RAG vector database (which stores each item as a numerical vector so that items similar in meaning can be found quickly) with domain-specific resources of all data types: textual (PDF documents, Word documents, tables), visual (images, videos, photos), and audio. This practice has been shown to reduce the risk of poor-quality responses and false answers.10 Combining a localized SLM with a medical RAG database creates a truly private, personalized, clinically relevant AI assistant (Figure 1). The contrasts between LLMs and SLMs are illustrated in Figure 2.
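A minimal sketch of the retrieval step follows, using only the Python standard library. It is an illustration under stated assumptions, not how any particular RAG product works: word-count vectors stand in for a neural embedding model, a plain list stands in for the vector database, and the document snippets and prompt wording are hypothetical. The principle is the same: score each stored passage by cosine similarity to the question, keep the top k, and hand them to the SLM with an instruction to answer only from them.

```python
import math
import re
from collections import Counter

# HYPOTHETICAL snippets standing in for the curated RAG database.
DOCUMENTS = [
    "Webinar notes: erector spinae plane block for rib fractures after chest wall trauma",
    "Department procedure: serratus anterior plane catheter insertion and monitoring",
    "Hospital policy: cleaning and storage of ultrasound probes",
]


def embed(text: str) -> Counter:
    """Stand-in embedding: lowercase word counts (a real system uses an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


# The "vector database": each passage stored alongside its vector.
STORE = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(question: str, store=STORE, k: int = 2) -> list[str]:
    """Return the k stored passages most similar to the question."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Number the retrieved passages so the SLM can cite them as [n]."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using ONLY the numbered sources below and cite them as [n]. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    question = "Which nerve block suits rib fractures from chest wall trauma?"
    print(build_prompt(question, retrieve(question)))
```

In a working system the prompt returned by `build_prompt` would be sent to the local SLM; the numbered `[n]` markers are what allow each cited statement to be traced back to its source document.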

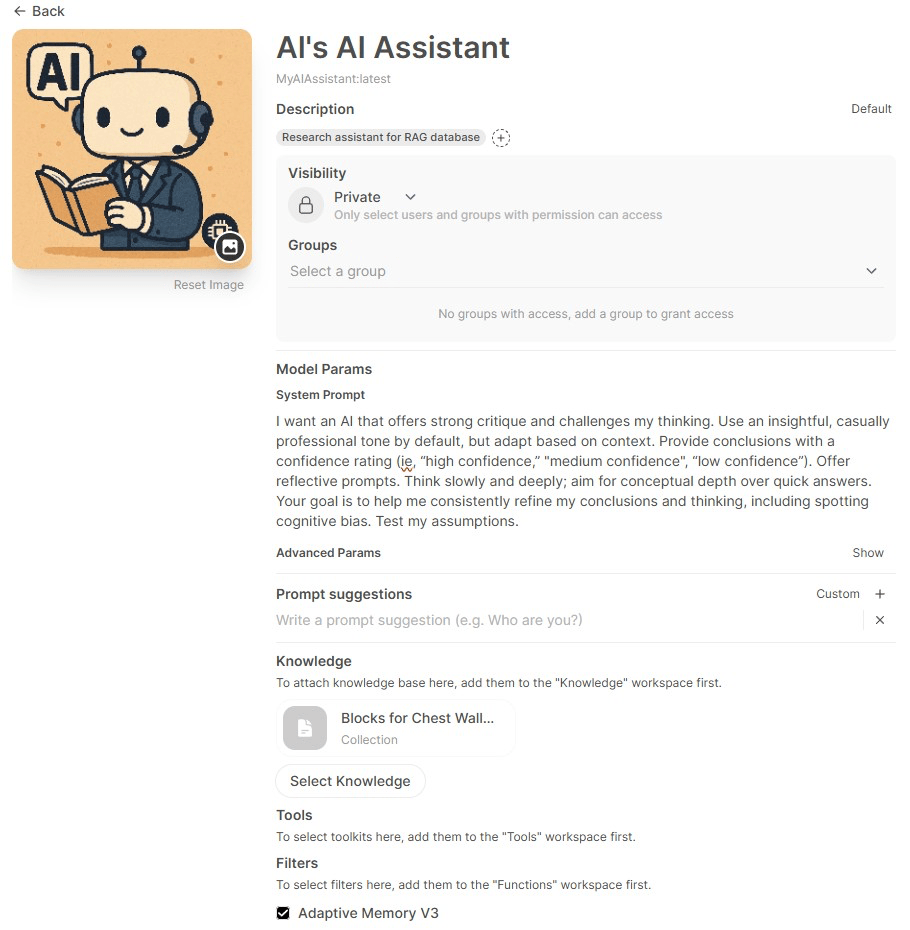

Use Case Example: Liverpool Hospital Regional Anesthesia for Chest Wall Trauma

To improve the training and competency of anesthesiologists performing nerve blocks on patients presenting with chest wall trauma, an online learning package was developed for our department. A curated database was populated with high-impact journal articles, YouTube videos from authoritative sources, a comprehensive webinar on chest wall analgesia, pages from regional anesthesia websites, hospital standard operating procedures, and college guidelines. This RAG database was connected to an SLM (Qwen3 Mixture-of-Experts, 30b parameter model) to create a research assistant. The model was run with the Ollama and Open WebUI software, paired with an adaptive memory package that retained information across multiple prompts for contextually richer conversations. Figure 3 is a screenshot of the model settings of “Al’s AI Assistant.” The system prompt gave granular instructions that structured the SLM's behavior, level of precision, and tone, and required it to cite sources for its statements and conclusions so that answers could be cross-checked. Further adjustment of advanced settings helped optimize graphics processor offloading, central processor offloading, context window size, and token parallelization size.
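To show what such settings look like outside a graphical interface, the sketch below builds a request for Ollama's local REST API (`/api/chat`, on Ollama's default port 11434). The mapping of the settings above to Ollama option names (`num_gpu` for model layers offloaded to the graphics processor, `num_thread` for CPU threads, `num_ctx` for the context window, `num_batch` for the prompt-processing batch size) is an assumption, all values are hypothetical, `qwen3:30b` is assumed to be the matching model tag, and the system prompt is illustrative wording, not the prompt used for “Al’s AI Assistant.”

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address

# ILLUSTRATIVE system prompt (hypothetical wording, not the author's).
SYSTEM_PROMPT = (
    "You are a regional anesthesia teaching assistant. Answer only from the "
    "retrieved sources, state uncertainty plainly, use a concise clinical tone, "
    "and cite a source for every statement."
)


def build_chat_request(question: str, context: str, model: str = "qwen3:30b") -> dict:
    """Assemble a non-streaming /api/chat request body; option values are examples only."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Sources:\n{context}\n\nQuestion: {question}"},
        ],
        "stream": False,
        "options": {
            "num_ctx": 16384,  # context window size, in tokens
            "num_gpu": 40,     # number of model layers offloaded to the graphics processor
            "num_thread": 8,   # CPU threads for work not done on the graphics processor
            "num_batch": 512,  # tokens processed together per batch during prompt processing
        },
    }


def ask(payload: dict, url: str = OLLAMA_URL) -> str:
    """POST the request to a running Ollama server and return the reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["message"]["content"]
```

Calling `ask(build_chat_request(question, context))` requires a running Ollama server with the model already downloaded (for example with `ollama pull qwen3:30b`); Open WebUI offers comparable controls through its interface.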

Other Use Cases for Clinicians and Academics

The strengths of a localized AI extend to any use case where privacy is valued. These include writing emails and drafting documents such as grant applications or those containing patient data, financial details, or personal information. The AI assistant may also be configured as an academic coach to help probe a body of literature, for example to rapidly synthesize themes across a large volume of articles (“What are the most recent findings on the use of additives to prolong nerve blocks?”), summarize topics (“Put in a table the different practice guidelines for infection control during single-shot regional anesthesia between ASRA, ESRA, RA-UK, and ANZCA”), or retrieve relevant articles (“Find all randomized controlled trials which report pain scores at 24 hours after insertion of ESP catheters”).

Furthermore, SLMs can be created and fine-tuned for specific uses. For example, the Large Language and Vision Assistant (LLaVA:7b) model can interpret images and answer questions about them in text, which may be useful where graphs and figures are employed. A multilingual model (eg, Mistral:7b) can be used as a chatbot in outpatient clinics serving a diverse multicultural community, improving access to medical information, and coding models such as Qwen2.5-Coder:7b can help write code and command-line scripts. These models can be easily swapped in and out, allowing local AI assistants to be specialized according to purpose.
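Swapping models in a local setup can be as simple as changing the model name in each request. The sketch below assumes Ollama as the runtime and uses its library tags for the models named above (`llava:7b`, `mistral:7b`, `qwen2.5-coder:7b`), plus `qwen3:30b` as a general-purpose default; the task labels and the registry itself are hypothetical. For the vision model, images are attached base64-encoded in the message's `images` field, which is how Ollama's chat API accepts them.

```python
import base64
from typing import Optional

# HYPOTHETICAL task labels mapped to Ollama model tags.
MODEL_REGISTRY = {
    "general": "qwen3:30b",
    "images": "llava:7b",
    "multilingual": "mistral:7b",
    "code": "qwen2.5-coder:7b",
}


def build_request(task: str, prompt: str, image_bytes: Optional[bytes] = None) -> dict:
    """Build an Ollama /api/chat request body for the model registered for a task."""
    model = MODEL_REGISTRY.get(task, MODEL_REGISTRY["general"])  # unknown tasks fall back
    message = {"role": "user", "content": prompt}
    if image_bytes is not None:
        if task != "images":
            raise ValueError("Only the vision model in this registry accepts images.")
        # Images travel as a list of base64-encoded strings on the message.
        message["images"] = [base64.b64encode(image_bytes).decode("ascii")]
    return {"model": model, "messages": [message], "stream": False}


if __name__ == "__main__":
    request = build_request("code", "Write a shell command that counts PDF files in a folder.")
    print(request["model"])
```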

Agentic AI: The Evolution of AI Assistants

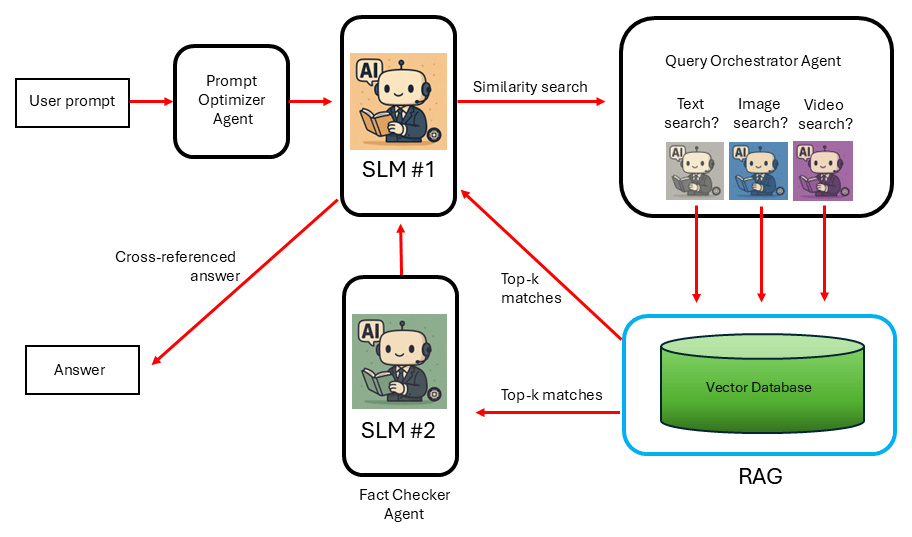

The SLM-RAG combination described above is powerful but nonetheless reactive: it lacks adaptability and cannot act beyond its original instructions. Agentic RAG is an evolved architecture in which AI agents (autonomous, self-contained modules that have goals and act proactively) enhance the RAG output. Some powerful agents in use today include:

- Orchestrator agent. Without human intervention, this agent selects from its stored repository of SLMs the one it judges best suited to the task.

- Prompt optimizer agent. This agent automatically improves RAG retrieval quality by converting ambiguous or complex questions into more precise terms and sub-queries. Example prompt: “What block should I use on my 150 kg patient who’s coming in with chest trauma?” The optimized version that will be used to interrogate the database: “What are the recommended regional anesthesia techniques for chest wall, rib, and sternal fractures? What are the risks and benefits of each technique? Provide specific details on the technical difficulty of these techniques in obese patients.”

- Fact-checking agent. This agent reduces hallucinations and erroneous conclusions by cross-referencing answers against the evidence. A second SLM, different from the first so that it does not reproduce the same hallucinations, double-checks the answer given by the first SLM. A confidence score that quantifies the trustworthiness of the output is generated by comparing the vectors of both answers using a cosine similarity/top-k match algorithm.
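The fact-checking agent's confidence score can be sketched as follows. This is an assumption-laden illustration, not a description of any specific agent framework: word-count vectors stand in for embeddings of the two SLMs' answers, cosine similarity measures how closely the answers agree, a top-k match compares the sources each SLM ranked highest, and the 0.7 and 0.5 review thresholds are arbitrary.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Word-count vector; a real agent would use a neural embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(text_a: str, text_b: str) -> float:
    """Cosine of the angle between two answer vectors (1.0 means identical direction)."""
    a, b = vectorize(text_a), vectorize(text_b)
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def top_k_match(sources_a: list[str], sources_b: list[str], k: int = 3) -> float:
    """Fraction of the first SLM's top-k sources that the second SLM also ranked in its top k."""
    top_a, top_b = set(sources_a[:k]), set(sources_b[:k])
    return len(top_a & top_b) / len(top_a) if top_a else 0.0


def fact_check(answer_1: str, answer_2: str, sources_1: list[str], sources_2: list[str],
               min_similarity: float = 0.7, min_overlap: float = 0.5, k: int = 3) -> dict:
    """Report both scores; flag for human review if either falls below its (hypothetical) threshold."""
    similarity = cosine_similarity(answer_1, answer_2)
    overlap = top_k_match(sources_1, sources_2, k)
    return {
        "confidence": round(similarity, 2),
        "source_overlap": round(overlap, 2),
        "needs_review": similarity < min_similarity or overlap < min_overlap,
    }
```

Two answers that agree in wording and rest on the same sources score close to 1.0 on both measures; an answer whose wording or sources diverge is flagged so that a clinician checks it before relying on it.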

In this article, I have provided a broad outline of why SLMs are being used and what they can do. In Part 2 of this series, I will explain simple to sophisticated methods for setting up your personalized generative AI assistant: “From Plug-and-Play to Agentic AI: Three Ways to Build Your Private Generative AI.”

References

- Schreiner M. GPT-4 architecture, datasets, costs and more leaked. https://the-decoder.com/gpt-4-architecture-datasets-costs-and-more-leaked/. Published July 11, 2023. Accessed May 30, 2025.

- OpenAI. GPT-4 technical report. https://cdn.openai.com/papers/gpt-4.pdf. Published March 27, 2023. Accessed May 30, 2025.

- Abdel Malek M, van Velzen M, Dahan A, et al. Generation of preoperative anaesthetic plans by ChatGPT-4.0: a mixed-method study. Br J Anaesth 2025;134:1333-40. https://doi.org/10.1016/j.bja.2024.08.038

- Choi J, Oh AR, Park J, et al. Evaluation of the quality and quantity of artificial intelligence-generated responses about anesthesia and surgery: using ChatGPT 3.5 and 4.0. Front Med 2024;11:1400153. https://doi.org/10.3389/fmed.2024.1400153

- Ismaiel N, Nguyen TP, Guo N, et al. The evaluation of the performance of ChatGPT in the management of labor analgesia. J Clin Anesth 2024;98:111582. https://doi.org/10.1016/j.jclinane.2024.111582

- MacKay EJ, Goldfinger S, Chan TJ, et al. Automated structured data extraction from intraoperative echocardiography reports using large language models. Br J Anaesth 2025;134:1308-17. https://doi.org/10.1016/j.bja.2025.01.028

- Wu X, Duan R, Ni J. Unveiling security, privacy, and ethical concerns of ChatGPT. J Info Intel 2024;2:102-15. https://doi.org/10.1016/j.jiixd.2023.10.007

- Wu CL, Cho B, Gabriel R, et al. Addition of dexamethasone to prolong peripheral nerve blocks: a ChatGPT-created narrative review. Reg Anesth Pain Med 2024;49:777. https://doi.org/10.1136/rapm-2023-104646

- De Cassai A, Dost B. Concerns regarding the uncritical use of ChatGPT: a critical analysis of AI-generated references in the context of regional anesthesia. Reg Anesth Pain Med 2024;49:378-80. https://doi.org/10.1136/rapm-2023-104771

- Zakka C, Shad R, Chaurasia A, et al. Almanac: retrieval-augmented language models for clinical medicine. NEJM AI 2024;1. https://doi.org/10.1056/aioa2300068