From Plug-and-Play to Agentic AI: Three Ways to Build Your Private Generative AI

Private Generative AI for Anesthesiologists: Part 2

In the November 2025 newsletter, we discussed the advantages and disadvantages of large language models (LLMs), such as ChatGPT, versus small language models (SLMs). An SLM connected to a retrieval-augmented generation (RAG) database populated with curated regional anesthesia resources was used as a case study. In this second part of the series, we explore how to set up your own personalized generative AI assistant. Three methods are discussed: plug-and-play (easiest to set up, but with limited capabilities); intermediate (requires more configuration, but can integrate RAG); and advanced agentic AI (most complex, but harnesses the full capability of AI). Table 1 provides a quick reference guide to the technical terms used in this article.

Table 1. Technical terms used in this article

| Term | Definition |
|---|---|
| Token | The smallest unit of text processed by a language model. Tokens vary in character length depending on how frequently a character sequence appears in the model's training text, so a single English word may be composed of several tokens. |
| Parameter | A learned weight or bias, set during pretraining, that determines how the model processes tokens. As a crude generalization, the more parameters an SLM has, the more accurate its responses. However, more efficient pretraining can enable smaller SLMs to perform as well as larger SLMs and even compete with massive LLMs. Parameters are loaded into graphics memory when a model is loaded, so available video memory directly limits which models can run and how fast. |
| Quantization | One of several techniques to reduce the compute resources needed to run an SLM. High-precision parameters (eg, FP16, 16-bit floating point) are reduced to lower precisions, such as 8-bit (Q8) or 4-bit (Q4), at some cost in accuracy. |
| GPT-Generated Unified Format (GGUF) and Apple MLX | Model formats used to reduce the compute cost of running SLMs. Models are split to offload work to both the graphics and central processors; this parallelization attempts to make maximal use of available compute resources while avoiding bottlenecks. GGUF models are commonly used on Windows-based systems (and also run on macOS and Linux), while MLX models are optimized for Apple Silicon-based systems. |
| Mixture of Experts (MoE) | Another method to reduce per-token compute cost. The model is divided into expert subnetworks that specialize in different domains. All parameters of an MoE SLM are loaded into memory, but only a few experts are executed for each token of the user query. This improves efficiency without sacrificing too much performance. |
| AI agent | A self-contained software program that is given autonomy to use available tools to proactively achieve its goals. An example of an AI agent would be a fact-checker program that uses one language model to cross-reference answers provided by another language model, reducing the risk of hallucinations. |
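To make the MoE idea concrete, the toy sketch below routes one token through only its top-scoring experts. It is a minimal illustration under stated assumptions, not how any particular model is implemented. The router scores are supplied by hand rather than learned, and each hypothetical "expert" is simply a function that scales its input.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(token_vector, router_logits, experts, top_k=2):
    """Toy mixture-of-experts step for a single token.

    token_vector  -- the token's hidden state (list of floats)
    router_logits -- one score per expert (hand-picked here; learned in real models)
    experts       -- all experts are held in memory, as callables vector -> vector
    """
    # Rank every expert by its router score and keep only the top_k.
    ranked = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Renormalize the scores over the chosen experts so the weights sum to 1.
    weights = softmax([router_logits[i] for i in chosen])
    output = [0.0] * len(token_vector)
    for w, i in zip(weights, chosen):
        expert_out = experts[i](token_vector)  # only chosen experts are executed
        output = [o + w * e for o, e in zip(output, expert_out)]
    return output, chosen

# Four hypothetical experts that simply scale their input by 1, 2, 3, or 4.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
output, chosen = moe_layer([1.0, 0.5], [0.1, 2.0, 0.5, 1.0], experts, top_k=2)
print(chosen)  # [1, 3]
```

With `top_k=2` and four experts, only two expert functions run for the token even though all four are held in memory. That is the source of the per-token savings described in the table.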

Necessary Hardware and Software

Any combination of SLM and computing hardware is bound by a triad of compromise: speed, accuracy, and compute cost. An SLM can be both fast and accurate, but the user will pay for it with more expensive high-end hardware. If you prioritize low compute cost while maintaining responsiveness, you should expect lower-quality answers. Conversely, if you choose low compute cost and high-quality output, the response time to user questions may be impractically slow.

My approach is to first fix the lowest acceptable SLM response rate. For this author, that rate is four tokens/second, roughly equivalent to the adult silent reading speed of approximately three words/second. Compute cost, in turn, is directly related to the financial budget. A mid-tier Windows-based desktop would consist of a multicore, multithreaded central processor with 32 GB of system memory and an Nvidia or AMD graphics processor with at least 8 GB of video memory. These are often described as “gaming” desktops/laptops because they have a discrete graphics processor and memory to run high-resolution computer games; coincidentally, AI software relies heavily on the same hardware. An equivalent Apple Silicon machine would have an M3 or M4 chip, with its specialized graphics cores, and 64 GB of unified memory. Higher-end hardware quickly becomes very expensive, but it is necessary to run faster and larger SLMs with large RAG databases.
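The reading-speed threshold above is simple arithmetic. This sketch assumes roughly 0.75 English words per token, a common rule of thumb that matches the four-tokens-to-three-words equivalence. The true ratio depends on each model's tokenizer.

```python
# Assumption: about 0.75 English words per token (a rule of thumb; the real
# ratio depends on the model's tokenizer and the text being generated).
WORDS_PER_TOKEN = 0.75

def words_per_second(tokens_per_second, words_per_token=WORDS_PER_TOKEN):
    """Convert a model's generation speed (tokens/s) into words/s."""
    return tokens_per_second * words_per_token

def keeps_up_with_reader(tokens_per_second, reading_words_per_second=3.0):
    """True if the model writes at least as fast as an adult reads silently."""
    return words_per_second(tokens_per_second) >= reading_words_per_second

print(words_per_second(4))       # 3.0 words/second
print(words_per_second(4) * 60)  # 180.0 words/minute
print(keeps_up_with_reader(3))   # False: 2.25 words/second is too slow
```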

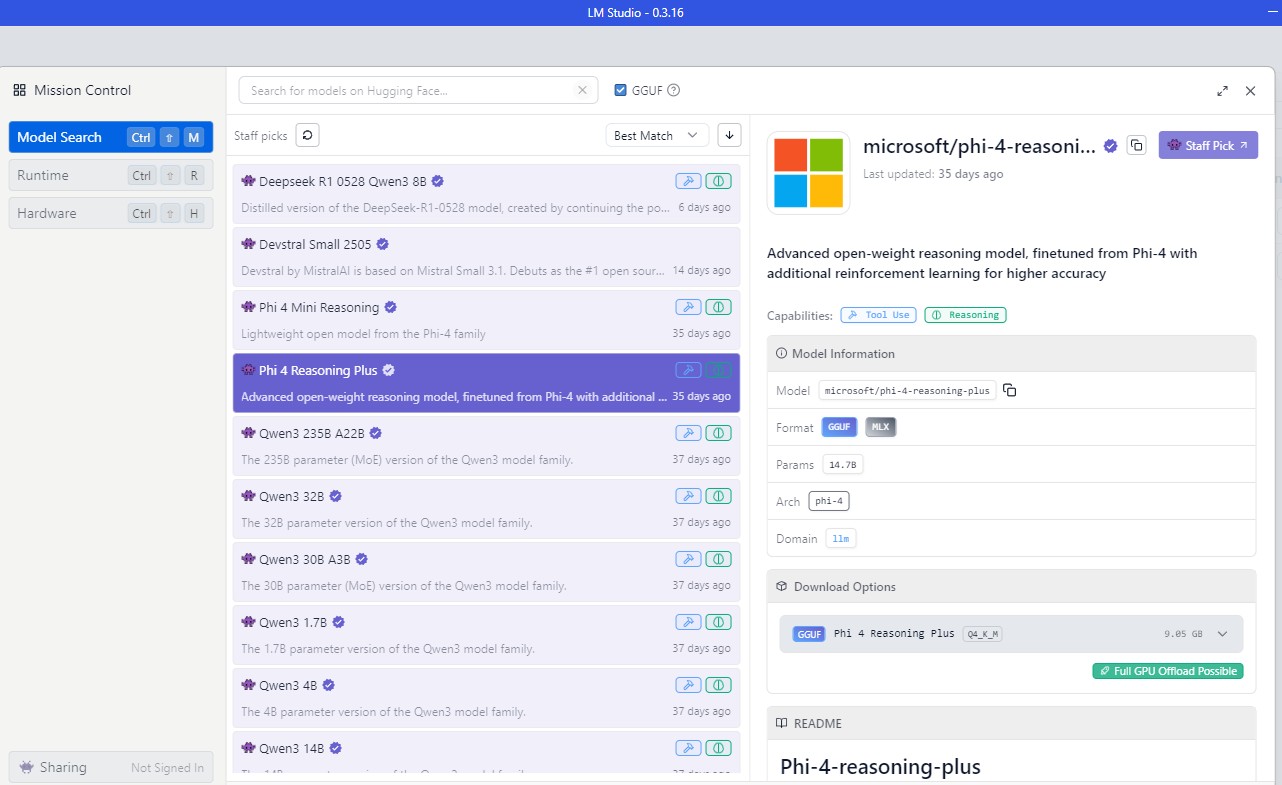

Finally, choose the SLM most suitable for the AI task from the Hugging Face repository (https://huggingface.co/),1 taking into account the required speed and hardware constraints. Models are chosen based on parameter size, fine-tuning, intended use, quantization, file format (GGUF or MLX), and open-source status, among other characteristics.
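You can run a first-pass check of whether a candidate model fits your hardware using only its parameter count and quantization. The model names below are hypothetical. The 20% allowance for the context cache and runtime buffers is a rough assumption, not a published figure.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str              # hypothetical model name, for illustration only
    params_billion: float  # parameter count, in billions
    bits_per_weight: int   # 16 for FP16, 8 for Q8, 4 for Q4
    fmt: str               # "gguf" or "mlx"

def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate memory for the weights alone: parameters x bytes per parameter."""
    return params_billion * bits_per_weight / 8

def fits(candidate, memory_gb, fmt, overhead=1.2):
    """Assumed ~20% extra for the context cache and runtime buffers."""
    if candidate.fmt != fmt:
        return False
    needed = weight_memory_gb(candidate.params_billion, candidate.bits_per_weight)
    return needed * overhead <= memory_gb

candidates = [
    Candidate("example-8b-fp16", 8, 16, "gguf"),
    Candidate("example-8b-q4", 8, 4, "gguf"),
    Candidate("example-14b-q4", 14, 4, "gguf"),
    Candidate("example-8b-q4-mlx", 8, 4, "mlx"),
]

# A mid-tier Windows desktop with 8 GB of video memory
print([c.name for c in candidates if fits(c, memory_gb=8, fmt="gguf")])
# ['example-8b-q4']
```

Only the 8-billion-parameter Q4 GGUF model passes the check for an 8 GB graphics card. Because GGUF models can offload work to the central processor, a model that fails this check may still run, only more slowly.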

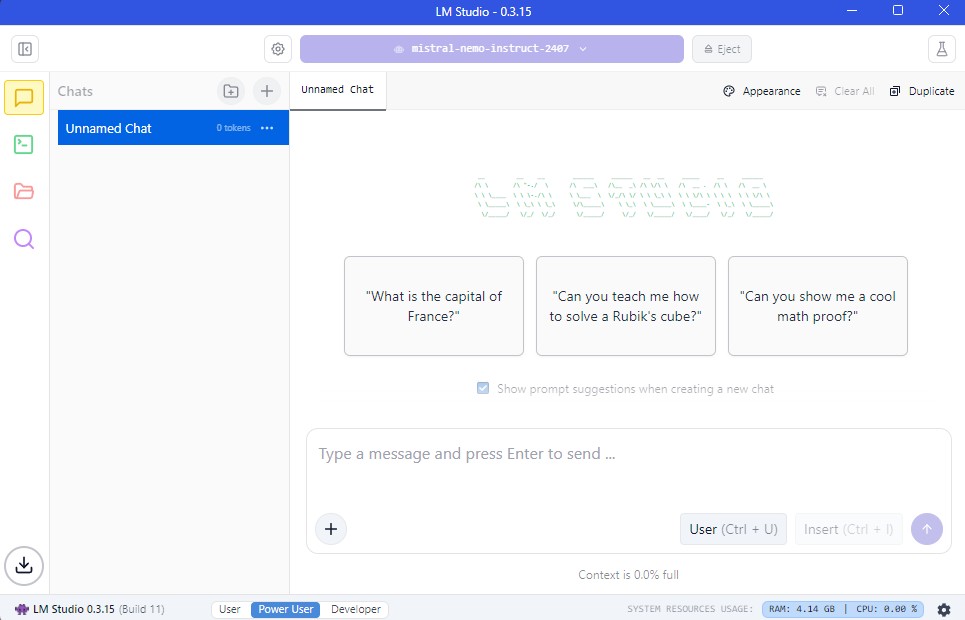

Method 1 Plug-and-Play: LM Studio

LM Studio is a downloadable desktop application that allows users to download, manage, and interact with SLMs. It offers the most user-friendly, graphically oriented environment. Its familiar chat interface is easy for first-time users who are uncomfortable with the command line (Figure 1). The application automatically recommends SLMs suited to your hardware, including an appropriate quantization and GGUF/MLX format. It then downloads them, so there is no need to search the Hugging Face repository manually (Figure 2). Multiple models can be stored locally, enabling rapid switching between SLMs.

LM Studio's capabilities are more limited. Responses depend on the quality of each model's pretraining, because LM Studio itself does not support advanced use cases such as querying a RAG database; only individual documents can be uploaded. On the other hand, LM Studio can be integrated into a more sophisticated agentic AI network (see below). LM Studio can be downloaded at https://lmstudio.ai.2
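Integration with other software works through LM Studio's local server mode, which exposes an OpenAI-compatible API. The sketch below assumes the server is running on its default address (http://localhost:1234) with a model already loaded. The model identifier is a placeholder, the system prompt is illustrative, and only the Python standard library is used.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port with a
# model loaded. "your-loaded-model" is a placeholder; use the identifier that
# LM Studio shows for the model you loaded.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"

def build_chat_request(question, model="your-loaded-model", temperature=0.2):
    """Build an OpenAI-style chat completion payload."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise regional anesthesia assistant."},
            {"role": "user", "content": question},
        ],
        "temperature": temperature,
    }

def ask_lm_studio(question, url=LMSTUDIO_URL):
    """Send the question to the local server and return the reply text."""
    data = json.dumps(build_chat_request(question)).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask_lm_studio("Which nerves does an interscalene block target?")` returns the model's reply as text. This is the same kind of connection an agentic tool uses when it treats LM Studio as its model provider.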

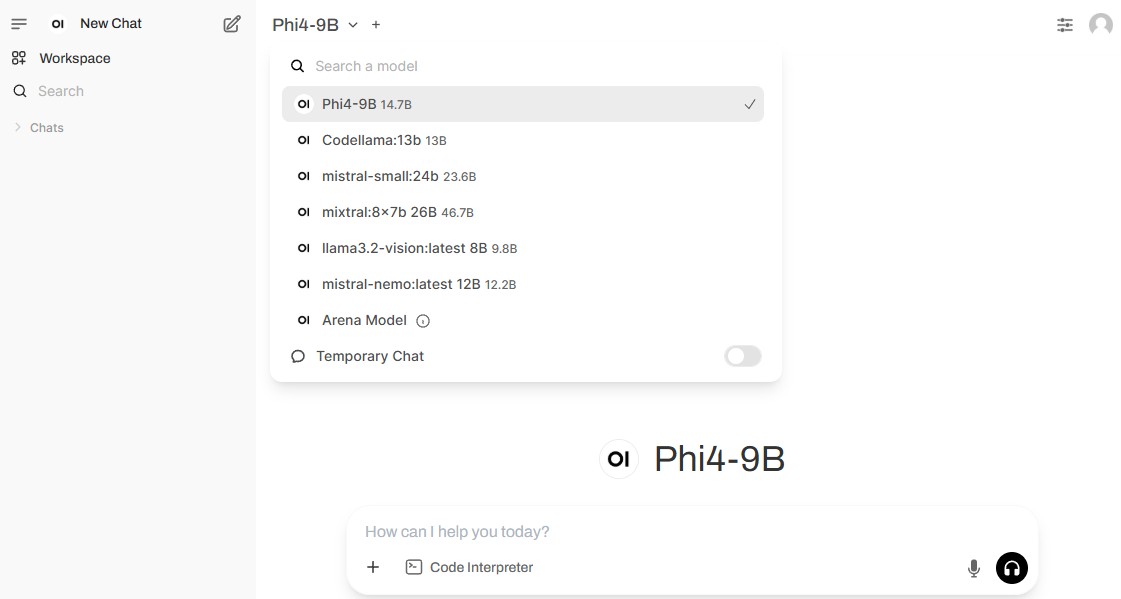

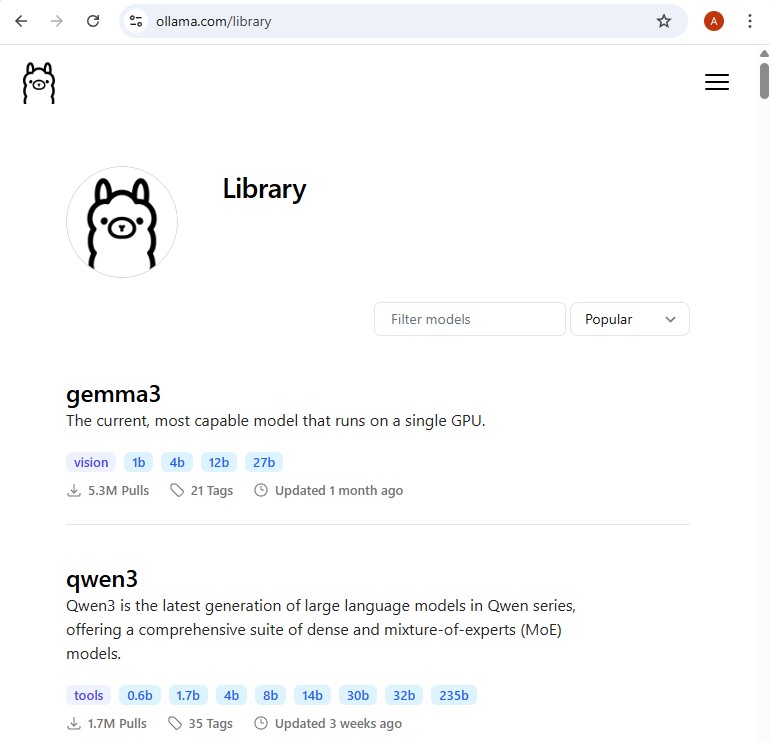

Method 2 Intermediate: Ollama with Open WebUI

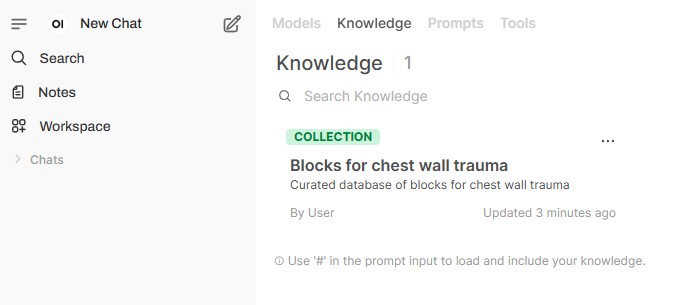

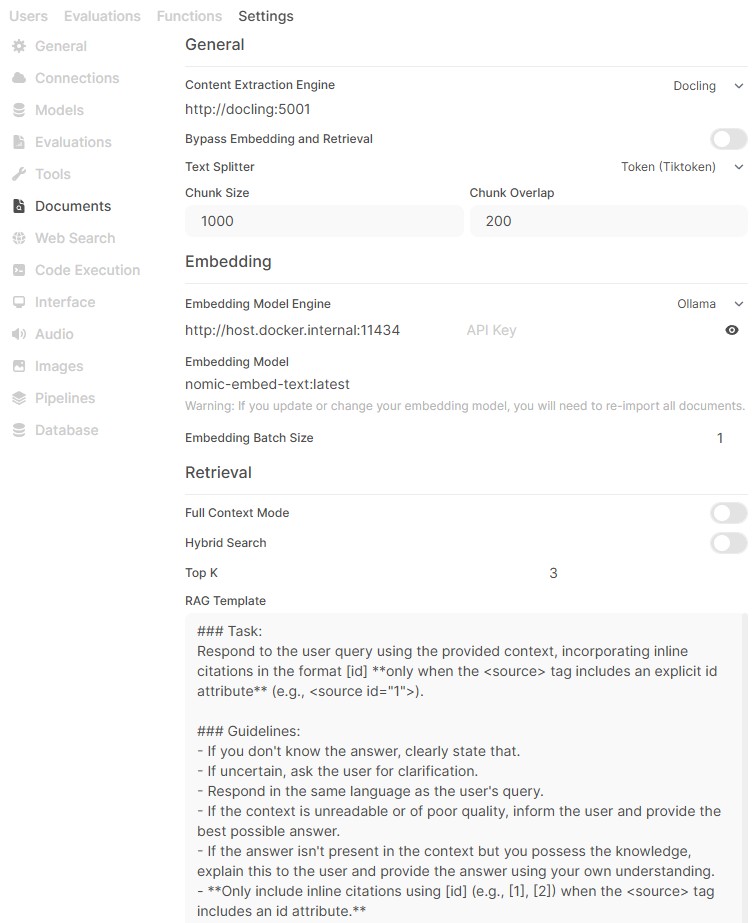

Ollama performs the same task as LM Studio but has three significant advantages. First, it is a more efficient program that requires fewer hardware resources, so more SLMs, and larger SLMs with more parameters, can be run than is possible with LM Studio. The Ollama command-line interface is often paired with a graphical user interface called Open WebUI (Figure 3), and a library of compatible SLMs is available for search and download from the Ollama repository (Figure 4). Second, Ollama with Open WebUI provides direct RAG integration with a vector database (Figure 5) and a customizable workflow, including selection of the recursive text splitter, embedding model, and tokenizer (Figure 6).
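The RAG workflow can be sketched in a few lines: split documents into chunks, embed them, store them, then retrieve the chunks most similar to a question. This is a simplified stand-in, not Open WebUI's implementation. A bag-of-words count replaces the embedding model, and an in-memory list replaces the vector database. The recursive splitter tries the coarsest separator (paragraph breaks) first and uses finer ones only for pieces that are still too long.

```python
import math
import re
from collections import Counter

def recursive_split(text, max_chars=500, separators=("\n\n", "\n", ". ", " ")):
    """Split text into chunks of at most max_chars, preferring coarse separators."""
    text = text.strip()
    if not text:
        return []
    if len(text) <= max_chars:
        return [text]
    for i, sep in enumerate(separators):
        if sep not in text:
            continue
        chunks, current = [], ""
        for part in text.split(sep):
            candidate = current + sep + part if current else part
            if len(candidate) <= max_chars:
                current = candidate  # keep merging small pieces into one chunk
                continue
            if current:
                chunks.append(current.strip())
            if len(part) <= max_chars:
                current = part
            else:
                # This piece alone is too long: split it with the finer separators.
                chunks.extend(recursive_split(part, max_chars, separators[i + 1:]))
                current = ""
        if current:
            chunks.append(current.strip())
        return [c for c in chunks if c]
    # No separator present: fall back to fixed-size slices.
    return [text[j:j + max_chars] for j in range(0, len(text), max_chars)]

def embed(text):
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, chunks, k=2):
    """Return the k chunks most similar to the question (an in-memory 'vector database')."""
    q = embed(question)
    index = [(chunk, embed(chunk)) for chunk in chunks]
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

notes = (
    "The interscalene block targets the brachial plexus roots.\n\n"
    "The adductor canal block targets the saphenous nerve."
)
chunks = recursive_split(notes, max_chars=80)
print(retrieve("Which nerve does the adductor canal block target?", chunks, k=1))
# ['The adductor canal block targets the saphenous nerve.']
```

A real embedding model matches meaning rather than shared words. Swapping it in changes only `embed`; the split, store, and retrieve steps stay the same.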

Third, Ollama integrates with external software through application programming interfaces (APIs), significantly expanding its versatility. Examples include a Google search API (accessed with an API key) that adds internet search results to complement RAG queries, and a connection to a different vector database.
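Ollama itself exposes a local REST API, which is how Open WebUI and other software communicate with it. The sketch below assumes Ollama is running on its default port (11434) and that a model has already been pulled; the model name is a placeholder. It shows how snippets from RAG or a web search could be combined with the question before it is sent to the model. The prompt wording is illustrative.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port and the model
# below has been pulled; "your-model-name" is a placeholder.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_prompt(question, context_snippets):
    """Combine retrieved snippets (from RAG, a web search, etc.) with the question."""
    context = "\n".join(f"- {s}" for s in context_snippets)
    return (
        "Answer using only the context below. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

def ask_ollama(question, context_snippets, model="your-model-name"):
    """Send one non-streaming generate request and return the model's text."""
    payload = {"model": model, "prompt": build_prompt(question, context_snippets), "stream": False}
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```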

The significant disadvantage of Ollama/Open WebUI is its technical demands. The user needs to be comfortable with some command-line coding and with installing a Linux subsystem, understand Docker containers, and have a working knowledge of network protocols. The webpage for Ollama is https://ollama.com,3 and the webpage for Open WebUI is https://openwebui.com.4 The latter website has help pages to guide installation and customization of Ollama/Open WebUI.

Method 3 Advanced: Agentic AI

Until recently, AI software development required coding in Python, the primary programming language for artificial intelligence and machine learning. This made the development of AI applications the domain of experienced programmers. It took the introduction of intuitive visual tools such as Langflow (https://www.langflow.org) in 2024 to significantly reduce this steep learning curve.5

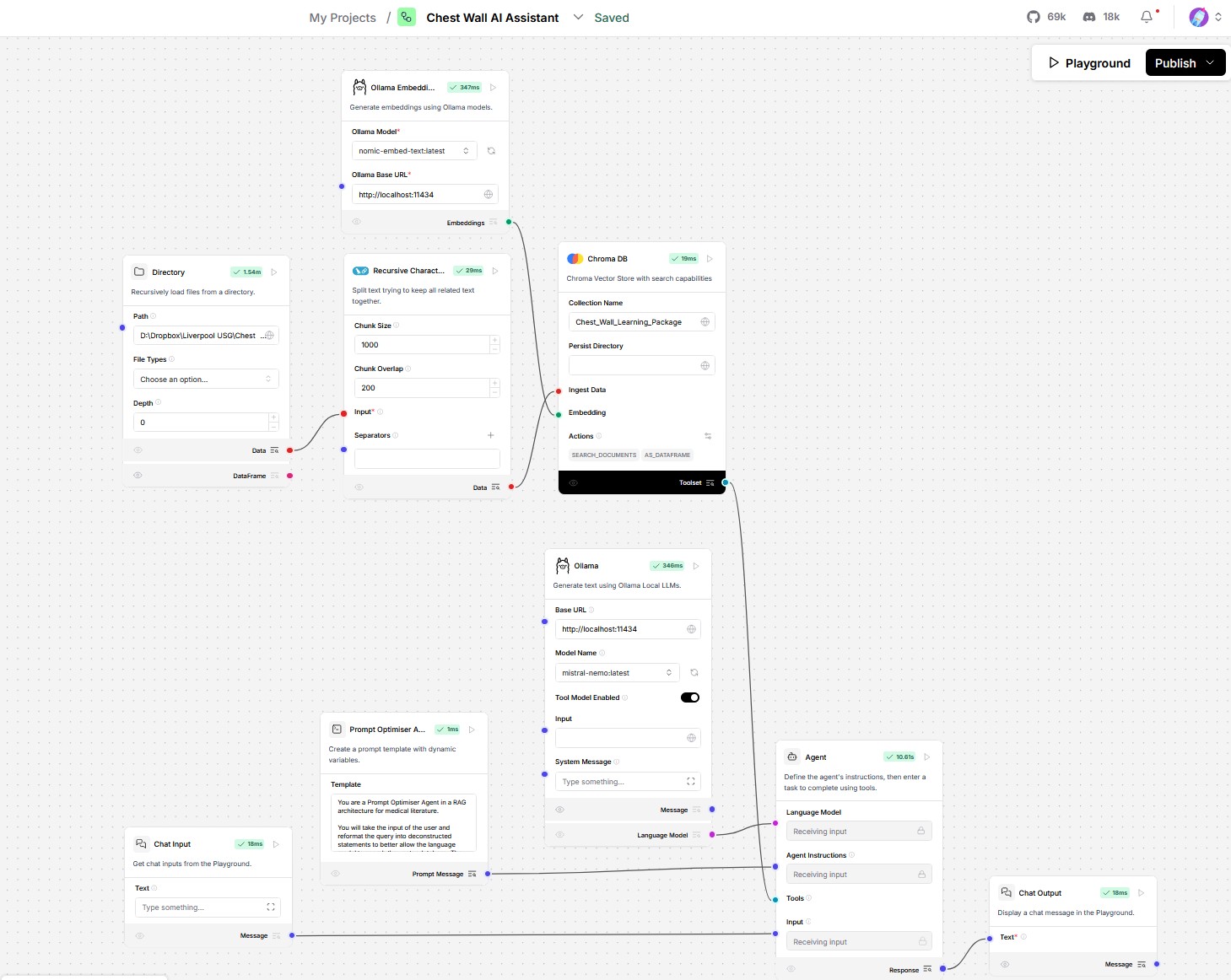

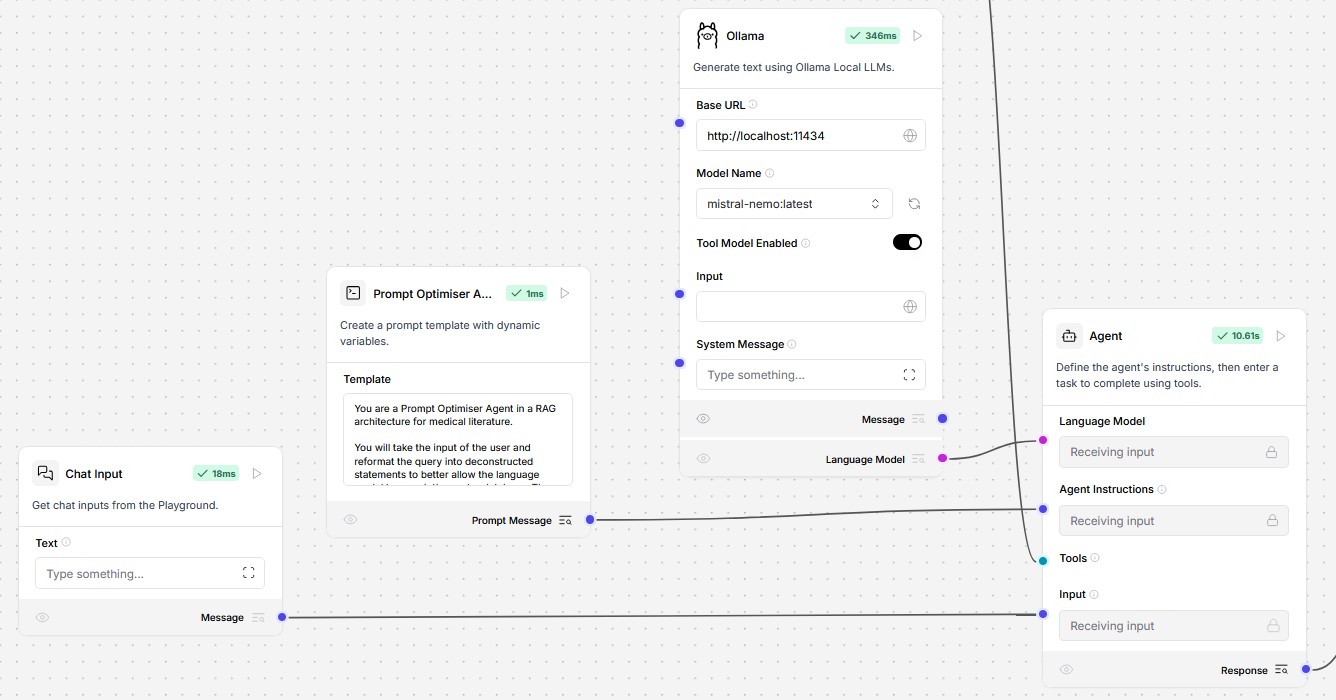

In Langflow, the underlying Python scripts are represented as graphical components linked by point-and-click data pipes. The necessary Python code is generated automatically, making it easy to construct agentic AI. Figure 7 shows the Langflow workspace for the author’s personalized AI assistant connected to a RAG database of regional anesthesia resources. In this example, a prompt-optimizing AI agent takes the user’s initial question and transforms it into multiple specific, unambiguous questions. The agent is powered by an SLM that can be executed in Ollama (Figure 8) or in LM Studio. By issuing more precise queries to the RAG database, this agent improves the quality of the overall system.
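The prompt-optimizing agent can be sketched as query decomposition in three steps. First, one language-model call rewrites the question into specific sub-questions. Next, each sub-question is sent to the RAG database. Finally, the merged passages are passed back to the model for the final answer. This is a hypothetical sketch, not the code Langflow generates for the author's workflow. `llm` and `retrieve` are placeholder functions that would wrap, for example, a local Ollama or LM Studio model and a vector database query.

```python
from typing import Callable, List

# Hypothetical prompt; in Langflow this would be configured in a prompt component.
DECOMPOSE_PROMPT = (
    "Rewrite the user's question as up to {n} short, specific, unambiguous "
    "questions, one per line, with no numbering.\n\nQuestion: {question}"
)

def decompose(question: str, llm: Callable[[str], str], n: int = 3) -> List[str]:
    """Ask a language model to split a vague question into specific sub-questions."""
    reply = llm(DECOMPOSE_PROMPT.format(n=n, question=question))
    subs = [line.strip("-* ").strip() for line in reply.splitlines()]
    subs = [s for s in subs if s]
    return subs[:n] or [question]  # fall back to the original question

def answer_with_rag(question: str,
                    llm: Callable[[str], str],
                    retrieve: Callable[[str, int], List[str]],
                    k: int = 2) -> str:
    """Query the RAG store once per sub-question, then answer from the merged passages."""
    passages: List[str] = []
    for sub in decompose(question, llm):
        for p in retrieve(sub, k):
            if p not in passages:  # drop duplicates returned by overlapping queries
                passages.append(p)
    context = "\n".join(passages)
    return llm(f"Context:\n{context}\n\nAnswer the question: {question}")
```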

Agentic AI tools are therefore agnostic to the underlying code or program and can integrate disparate resources. The pace of AI tool evolution is also expected to accelerate as more capabilities become available. For example, the Model Context Protocol, introduced in November 2024, gives AI agents a standard way to connect to external tools and data sources.
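Under the hood, Model Context Protocol messages are JSON-RPC 2.0 requests. For example, a client asks a server which tools it offers (`tools/list`) and then invokes one (`tools/call`). The sketch below only builds these messages and does not connect to a server. The tool name and its arguments are hypothetical.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched to them

def mcp_request(method, params=None):
    """Build a JSON-RPC 2.0 request message, the envelope MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

# Ask a server which tools it offers, then call one.
# "search_block_notes" is a hypothetical tool name for illustration.
list_tools = mcp_request("tools/list")
call_tool = mcp_request(
    "tools/call",
    {"name": "search_block_notes", "arguments": {"query": "adductor canal block"}},
)
```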

In the accompanying video, the three methods above are demonstrated using screen captures of the author’s computer setup (Windows PC, AMD Ryzen 9 multicore processor, Nvidia RTX 5080 with 16 GB of video memory).

In conclusion, we are living at a time when AI in medicine is rapidly becoming democratized. Until recently, the computer hardware required to run AI programs was prohibitively expensive. Similarly, writing AI software was unrealistic for anyone who was not a determined and skilled programmer. With lower hardware costs and software tools that simplify coding, physicians can now realistically build personalized AI programs that improve clinical productivity.

References

1. Hugging Face, Inc. New York, NY. Available at: https://huggingface.co. Accessed July 12, 2025.
2. Element Labs, Inc. Brooklyn, NY. Available at: https://lmstudio.ai. Accessed July 12, 2025.
3. Ollama. Palo Alto, CA. Available at: https://ollama.com. Accessed July 12, 2025.
4. Open WebUI, Inc. San Francisco, CA. Available at: https://openwebui.com. Accessed July 12, 2025.
5. DataStax. Santa Clara, CA. Available at: https://www.langflow.org. Accessed July 12, 2025.